Cutting through the clutter:

Navigating Compliance in Education

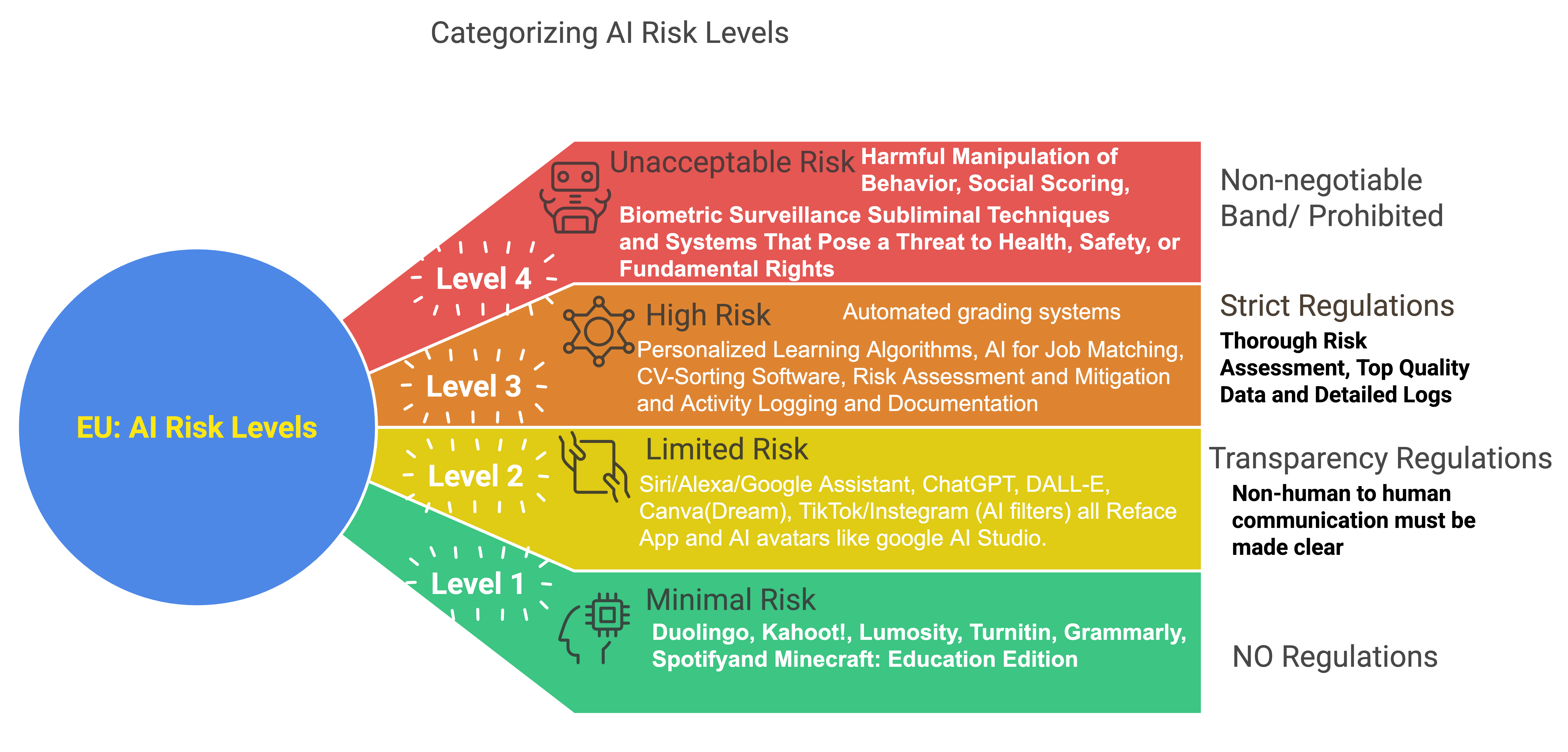

Artificial Intelligence (AI) is transforming education, offering immense opportunities but also posing significant risks. To ensure the ethical and responsible integration of AI in schools, the EU AI Act categorizes AI systems into four risk levels. This page provides an overview of these risk levels, examples of each, and actionable steps for schools to comply with regulations.

Level 4: Unacceptable Risk (Prohibited)

These AI systems pose a severe threat to human rights and safety and are strictly prohibited under the EU AI Act.

Examples of Unacceptable Risks:

- Harmful Manipulation of Behavior: AI that uses subliminal or deceptive techniques, or that exploits vulnerabilities such as a student's age, to distort behavior in harmful ways.

- Social Scoring Systems: Ranking students based on behavior or personal traits.

- Biometric Surveillance: Tools that use biometric data, such as facial analysis, to monitor students, for example by inferring their emotions in class.

What Schools Must Do:

- Identify and remove any prohibited AI applications from use.

- Educate staff on recognizing these systems to ensure they are not inadvertently adopted.
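
As a rough illustration of the first step, a school could keep a simple inventory of its AI tools and flag any that fall into the prohibited category. The sketch below is only one possible approach: the tool names, purposes, and risk classifications are hypothetical, and each school would need to classify its own tools against the Act.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskLevel(IntEnum):
    """The four risk levels described on this page."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


@dataclass(frozen=True)
class AITool:
    name: str
    purpose: str
    risk_level: RiskLevel


def find_prohibited(inventory):
    """Return the tools classified as unacceptable risk."""
    return [tool for tool in inventory if tool.risk_level == RiskLevel.UNACCEPTABLE]


# Hypothetical inventory; names and classifications are illustrative only.
inventory = [
    AITool("BehaviourRank", "ranks students by conduct", RiskLevel.UNACCEPTABLE),
    AITool("EssayGrader", "automated grading", RiskLevel.HIGH),
    AITool("ImageMaker", "AI image generation", RiskLevel.LIMITED),
    AITool("QuizTime", "interactive quizzes", RiskLevel.MINIMAL),
]

for tool in find_prohibited(inventory):
    print(f"Remove from use: {tool.name} ({tool.purpose})")
```

Keeping the inventory in one place also gives staff a reference list when they are considering new tools.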

Level 3: High Risk

High-risk AI systems can significantly impact students’ educational outcomes. These tools require strict compliance with transparency and safety regulations.

Examples of High-Risk Systems:

- Automated grading tools.

- Personalized learning algorithms.

- AI systems for job matching or career guidance.

- Tools that monitor students, such as systems that detect prohibited behavior during exams.

Compliance Steps for Schools:

- Conduct Risk Assessments: Evaluate each high-risk system for potential biases and privacy concerns.

- Maintain Detailed Logs: Ensure all decisions made by these systems are documented.

- Regular Audits: Periodically review these tools to ensure they meet ethical and legal standards.
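
To make the logging step more concrete, here is a minimal sketch of an append-only decision log stored as one JSON record per line. The field names, the file format, and the `log_decision` and `read_log` helpers are assumptions chosen for illustration; they are not prescribed by the EU AI Act, and a real deployment would also need to consider data protection and access control.

```python
import json
from datetime import datetime, timezone


def log_decision(log_path, system, subject_id, decision, reviewer=None):
    """Append one decision record to a JSON Lines file and return it.

    subject_id should be a pseudonymous identifier rather than a student's name.
    """
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system": system,
        "subject_id": subject_id,
        "decision": decision,
        "human_reviewer": reviewer,  # None if no staff member reviewed it
    }
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(entry) + "\n")
    return entry


def read_log(log_path):
    """Load all logged decisions, for example ahead of a periodic audit."""
    with open(log_path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]
```

A call such as `log_decision("decisions.jsonl", "essay-grader", "student-001", "grade: B", reviewer="teacher-17")` would add one record, and `read_log("decisions.jsonl")` would return every record for review.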

Level 2: Limited Risk

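Limited-risk AI tools pose little threat to safety or rights but are subject to transparency obligations: users should know when they are interacting with AI or viewing AI-generated content. With proper oversight, these tools can be used effectively.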

Examples of Limited Risk Tools:

- Voice assistants like Siri or Google Assistant.

- AI-driven creative tools like Canva Dream or DALL-E.

- Social media filters from TikTok or Instagram for educational projects.

Transparency Requirements:

- Clearly label where these tools use AI so that users know when they are interacting with it.

- Provide training for students and staff on how these tools function and their limitations.

Level 1: Minimal Risk

The EU AI Act places no additional obligations on these low-risk tools. They can be integrated into classrooms to enhance learning without extra compliance burdens under the Act.

Examples of Minimal Risk Tools:

- Duolingo for language learning.

- Kahoot! for interactive quizzes.

- Grammarly for writing assistance.

- Minecraft: Education Edition for creative learning.

How to Use These Tools:

- Encourage teachers to incorporate them into lesson plans.

- Encourage students to explore and use these tools independently to boost engagement.